Plenty of people have already written about how to install Ollama, so I won't go into much detail. Here are the brief steps.

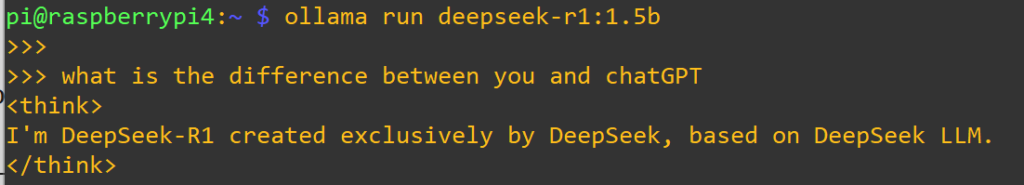

- Prepare a machine with a good GPU and CPU and more than 16 GB of RAM. (A Raspberry Pi can run DeepSeek 1.5B, other………. Please check my last post.)
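If you are not sure what your machine has, a quick check like the one below may help. It is only a rough sketch: the function names `total_ram_gib` and `ram_ok` are made up for this example, it assumes Linux (it reads /proc/meminfo), and the GPU part assumes an NVIDIA card with the driver installed.

```shell
#!/bin/sh
# Hypothetical helper for this post: report CPU cores, total RAM and NVIDIA GPU.

# Total RAM in whole GiB, read from a meminfo-style file (default: /proc/meminfo).
# Note: MemTotal is a little lower than the installed RAM, and the value is truncated.
total_ram_gib() {
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 / 1024 }' "${1:-/proc/meminfo}"
}

# Prints "yes" if the given GiB value is above the 16 GB guideline from this post.
ram_ok() {
    if [ "$1" -gt 16 ] 2>/dev/null; then echo yes; else echo no; fi
}

echo "CPU cores: $(nproc 2>/dev/null)"
ram=$(total_ram_gib 2>/dev/null)
echo "RAM: ${ram} GiB (more than 16: $(ram_ok "$ram"))"

# nvidia-smi only exists when the NVIDIA driver is installed.
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name,memory.total --format=csv,noheader
else
    echo "nvidia-smi not found (no NVIDIA driver, or a non-NVIDIA GPU)"
fi
```

Treat the RAM result as a hint only: a machine sold as "16 GB" will report slightly less than 16 GiB here.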

- Update your Linux package repositories and installed packages:

sudo apt-get update -y

sudo apt-get upgrade -y

- Install Ollama with the following command:

curl -fsSL https://ollama.com/install.sh | sh

- Run an LLM model. If the model is not already on your machine, it will be downloaded automatically.

ollama run <model>

e.g.: ollama run deepseek-r1:8b

- The model will be downloaded to /usr/share/ollama/.ollama/models/

- Which models can you run? Check here:

https://ollama.com/search

- The Ollama command line is somewhat similar to Docker's, check this.
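To make the Docker comparison a bit more concrete, here is a small cheat sheet written as a shell function. The pairing is my own loose analogy, not an official mapping, and the function name `ollama_equivalent` is made up for this example. The Ollama subcommands themselves (`pull`, `run`, `list`, `ps`, `stop`, `rm`, `create`, `push`) are real.

```shell
#!/bin/sh
# Docker command -> rough Ollama counterpart (a loose analogy, not an official mapping).
ollama_equivalent() {
    case "$1" in
        "docker pull")   echo "ollama pull" ;;    # download a model without running it
        "docker run")    echo "ollama run" ;;     # start a model (downloads it if missing)
        "docker images") echo "ollama list" ;;    # models stored locally
        "docker ps")     echo "ollama ps" ;;      # models currently loaded
        "docker stop")   echo "ollama stop" ;;    # unload a running model
        "docker rmi")    echo "ollama rm" ;;      # delete a local model
        "docker build")  echo "ollama create" ;;  # build a model from a Modelfile
        "docker push")   echo "ollama push" ;;    # upload a model to a registry
        *)               echo "no direct counterpart" ;;
    esac
}

# Print the whole table.
for cmd in "docker pull" "docker run" "docker images" "docker ps" \
           "docker stop" "docker rmi" "docker build" "docker push"; do
    printf '%-14s -> %s\n' "$cmd" "$(ollama_equivalent "$cmd")"
done
```

So if you know `docker pull` and `docker images`, then `ollama pull deepseek-r1:8b` followed by `ollama list` should feel familiar.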

PS: You can also install Ollama on Windows; please check the Ollama website for details.

Let's try running your own AI locally!

#OLLAMA #Model #AI #CPU #GPU #CUDB #RAM #RaspberryPI #Docker